Why You Need an Ingress Controller

When you deploy workloads to Kubernetes, each service typically runs inside a private cluster network. To expose your applications to the outside world, you need a way to route HTTP/HTTPS traffic into your cluster — and that’s where an Ingress Controller comes in.

An ingress controller acts as a smart traffic manager:

- Listens for requests coming into your cluster.

- Routes them based on rules you define in Kubernetes Ingress resources.

- Handles SSL termination, path-based routing, and even load balancing.

Without one, you’d have to expose each service individually via a LoadBalancer or NodePort Service, which quickly becomes messy, expensive (every LoadBalancer Service gets its own cloud load balancer IP), and hard to manage.

Why NGINX?

There are many ingress controllers available, from cloud-native options like Azure Application Gateway and Traefik to enterprise solutions like HAProxy or Kong. For the full list, see the Kubernetes Documentation.

However, NGINX Ingress Controller remains one of the most popular starting points because:

- It’s simple, open-source, and well-documented.

- It works on any Kubernetes cluster, from managed services like AKS to self-hosted clusters.

Preflight Steps

Before starting, I’m going to assume a couple of things (crazy of me, right?!). First, that you’ve already got some common packages installed on your local machine, the likes of:

- Microsoft.AzureCLI

- Microsoft.Azure.Kubelogin

- Kubernetes.kubectl

- Helm.Helm

- WSL (if you want that Linux feel)

Windows Install

If you’re more Windows-focused and missing any of those packages, we can use WinGet to get you set up.
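A minimal sketch of those installs, assuming the package names listed above are used as WinGet IDs (they match the IDs those tools publish under). Each line is a plain command, so it runs the same from PowerShell or Windows Terminal:

```shell
# Install each tool by its exact WinGet package ID.
winget install --exact --id Microsoft.AzureCLI
winget install --exact --id Microsoft.Azure.Kubelogin
winget install --exact --id Kubernetes.kubectl
winget install --exact --id Helm.Helm

# Optional: WSL for a Linux shell (may prompt for a reboot).
wsl --install
```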

WinGet CLI Install

If the WinGet CLI is missing from your system, here you go 🫡

Please close and reopen your terminal to refresh the environment variables.

After the relaunch, authenticate and log into your Azure Kubernetes Service (AKS) cluster.
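One way to do that, sketched under the assumption that your cluster uses Microsoft Entra ID authentication (which is why kubelogin is on the package list). "my-resource-group" and "my-aks-cluster" are placeholder names, so swap in your own:

```shell
# Sign in to Azure (opens a browser or device-code prompt).
az login

# Merge the AKS credentials into your kubeconfig.
# "my-resource-group" and "my-aks-cluster" are placeholders.
az aks get-credentials --resource-group my-resource-group --name my-aks-cluster

# Entra ID-enabled clusters: let kubectl reuse your Azure CLI login.
kubelogin convert-kubeconfig -l azurecli

# Quick sanity check that kubectl can reach the cluster.
kubectl get nodes
```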

Helm Chart Installation

For more information, you can check the official docs over at: ingress-nginx

Internal Load Balancer
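The internal variant can be reassembled from the flags in the Helm Command Breakdown table further down. Treat this as a sketch built from that table rather than the original snippet; it assumes a bash-style shell, where the trailing backslashes are line continuations:

```shell
# Install (or upgrade) ingress-nginx behind an Azure *internal* load balancer.
# The \. escapes stop Helm from splitting the annotation key on its dots.
helm upgrade --install ingress-nginx ingress-nginx \
  --repo https://kubernetes.github.io/ingress-nginx \
  --namespace ingress-nginx \
  --create-namespace \
  --set controller.replicaCount=2 \
  --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-internal"="true" \
  --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-health-probe-request-path"="/healthz" \
  --set controller.config."health-check-path"="/healthz"
```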

External (Public) Load Balancer
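For a public load balancer, a sketch under the assumption that you keep the same flags and simply drop the azure-load-balancer-internal annotation. Without that annotation, AKS gives the controller's LoadBalancer Service a public frontend IP:

```shell
# Same install, but without the internal annotation, so Azure creates a
# public frontend IP. The /healthz probe settings are kept as-is.
helm upgrade --install ingress-nginx ingress-nginx \
  --repo https://kubernetes.github.io/ingress-nginx \
  --namespace ingress-nginx \
  --create-namespace \
  --set controller.replicaCount=2 \
  --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-health-probe-request-path"="/healthz" \
  --set controller.config."health-check-path"="/healthz"
```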

Helm Command Breakdown:

| Flag / Parameter | Purpose | Example / Explanation |
|---|---|---|
| `helm upgrade --install ingress-nginx ingress-nginx` | Installs the NGINX Ingress Controller if it doesn’t exist, or upgrades it if it already does. | Ensures the release name is ingress-nginx, making it idempotent for redeployments. |
| `--repo https://kubernetes.github.io/ingress-nginx` | Points Helm to the official NGINX Ingress Controller chart repository. | Ensures you’re always using the maintained upstream chart from the Kubernetes project. |
| `--namespace ingress-nginx` | Installs the controller into its own namespace. | Keeps ingress components isolated from workloads and improves manageability. |
| `--create-namespace` | Creates the namespace automatically if it doesn’t exist. | Saves you from running a separate kubectl create namespace ingress-nginx command. |
| `--set controller.replicaCount=2` | Runs two replicas of the ingress controller. | Provides high availability and resilience: one pod can restart without downtime. |
| `--set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-internal"="true"` | Configures Azure to create an internal Load Balancer instead of a public one. | Keeps ingress traffic private within your virtual network, which is ideal for internal apps. |
| `--set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-health-probe-request-path"="/healthz"` | Sets the HTTP path Azure Load Balancer will probe for health checks. | Azure uses this endpoint to verify if ingress pods are healthy and ready. |
| `--set controller.config."health-check-path"="/healthz"` | Updates the NGINX Ingress Controller configuration to expose /healthz. | Ensures the health probe path actually exists inside NGINX and returns 200 OK. |

Why This Matters

This configuration ensures:

- The Ingress Controller is deployed privately within your AKS virtual network (when you use the internal load balancer option), keeping traffic internal and secure.

- Azure Load Balancer can correctly probe the ingress controller using the /healthz endpoint, so unhealthy backends are taken out of rotation automatically and traffic is distributed reliably.

- The setup covers the production basics: redundancy (2 replicas) and proper health probes.

Running two replicas of the ingress controller is important because it provides:

- High Availability: If one pod fails, upgrades, or is rescheduled, the second replica continues handling traffic — preventing downtime.

- Rolling Updates Without Disruption: During Helm upgrades or AKS node maintenance, at least one controller remains online, maintaining active connections.

- Load Distribution: With multiple replicas, incoming requests are balanced across pods, improving throughput and reducing latency spikes.

- Resilience to Node Failure: If a node hosting one replica becomes unavailable, the other replica keeps ingress running, provided the scheduler placed it on a different node (the chart doesn’t enforce this by default).
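To confirm where the two replicas actually landed, a quick check, assuming the ingress-nginx namespace from the Helm command:

```shell
# The NODE column shows which AKS node each controller replica runs on.
# Two different node names mean one node failure won't take ingress down.
kubectl get pods -n ingress-nginx -o wide
```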

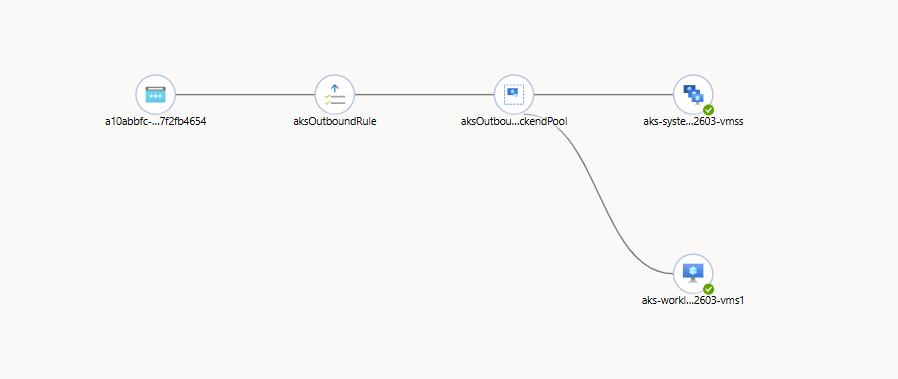

Connectivity Check

If you want to check that the NGINX Ingress pods are working and reporting, open the Health Probes overview of the cluster’s Azure Load Balancer (it lives in the AKS node resource group). If everything is configured correctly, you should see green health indicators on the backend pools.

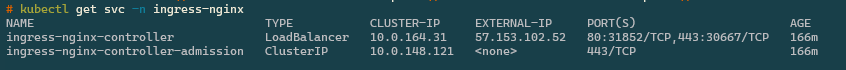

Finally, we can check the local services (svc) within the AKS cluster.
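Assuming the ingress-nginx namespace used by the Helm install, that check looks like the command below. You would expect an ingress-nginx-controller Service of type LoadBalancer with an EXTERNAL-IP (a private address for the internal variant), plus an ingress-nginx-controller-admission ClusterIP Service used by the chart’s validating webhook:

```shell
# List the Services created by the ingress-nginx Helm chart.
kubectl get svc -n ingress-nginx
```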

Example Nginx Deployment

So we have a working ingress controller 🥳. Now let’s deploy a basic NGINX backend and configure some connectivity, over port 80 (HTTP) for now. Don’t worry, TLS is coming in another post soon 😉
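A minimal sketch of such a backend. The namespace and app name mynginxapp are hypothetical (chosen to match the svc-/ingress-mynginxapp.yaml files used later), as is the filename deploy-mynginxapp.yaml; the image is the public nginx:stable tag. The heredoc only writes the manifest to disk:

```shell
# Write a Namespace plus a two-replica NGINX Deployment to one file.
# The quoted 'EOF' stops the shell from expanding anything in the YAML.
cat > deploy-mynginxapp.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: mynginxapp            # hypothetical namespace
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mynginxapp
  namespace: mynginxapp
spec:
  replicas: 2                 # two NGINX web server pods
  selector:
    matchLabels:
      app: mynginxapp         # must match the pod template labels below
  template:
    metadata:
      labels:
        app: mynginxapp
    spec:
      containers:
        - name: nginx
          image: nginx:stable
          ports:
            - containerPort: 80   # plain HTTP for now
EOF
```

Apply it with kubectl apply -f deploy-mynginxapp.yaml. The Namespace sits first in the file, so kubectl creates it before the Deployment that lives in it.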

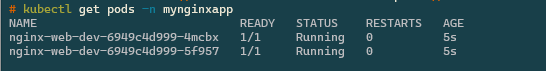

Once this has deployed, we can check the namespace to see the two NGINX web servers.
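Assuming a hypothetical namespace called mynginxapp, listing its pods should show two nginx pods in the Running state:

```shell
# Expect two nginx pods, one per web server replica; -o wide adds the node.
kubectl get pods -n mynginxapp -o wide
```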

Service

What is a Service?

A Kubernetes Service provides a stable network endpoint for a group of Pods, ensuring reliable communication even as Pods are created or replaced. It automatically routes traffic to ready Pods using label selectors and can expose applications internally or externally depending on its type. In essence, a Service decouples dynamic Pods from how clients connect to them. Learn more in the official Kubernetes documentation.
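A minimal ClusterIP Service sketch, assuming the web server pods carry the label app: mynginxapp and live in a namespace called mynginxapp (both hypothetical names). The heredoc writes it straight to svc-mynginxapp.yaml:

```shell
# Write a ClusterIP Service manifest; the quoted 'EOF' stops the shell
# from expanding anything inside the YAML.
cat > svc-mynginxapp.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: mynginxapp          # hypothetical name
  namespace: mynginxapp     # hypothetical namespace
spec:
  type: ClusterIP           # internal only; the Ingress handles outside traffic
  selector:
    app: mynginxapp         # must match the labels on the NGINX pods
  ports:
    - name: http
      port: 80              # port the Service exposes
      targetPort: 80        # containerPort on the NGINX pods
EOF
```

ClusterIP is enough here because nothing outside the cluster talks to this Service directly; external requests arrive through the ingress controller.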

Save this as svc-mynginxapp.yaml
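Then apply it, assuming kubectl is still pointed at your AKS cluster:

```shell
# Create or update the Service from the saved manifest.
kubectl apply -f svc-mynginxapp.yaml
```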

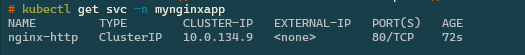

Give it about 30 seconds to apply and configure, and then we can check it with:
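Assuming the hypothetical mynginxapp namespace, these commands show the Service and the EndpointSlice that tracks its backing pods; the ENDPOINTS column should list the pod IPs:

```shell
# The Service should be type ClusterIP on port 80.
kubectl get svc -n mynginxapp

# Each ready pod selected by the Service appears as an endpoint address.
kubectl get endpointslices -n mynginxapp
```

An empty ENDPOINTS column usually means the Service selector doesn’t match the pod labels.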

Ingress

What is an Ingress?

A Kubernetes Ingress is a resource that manages external access to applications running inside a cluster, typically over HTTP or HTTPS. It acts as a smart entry point, routing incoming traffic to the appropriate Service based on defined rules such as hostnames and paths. With an Ingress Controller (like NGINX or Traefik), you can handle features like TLS termination, path-based routing, and load balancing without exposing every Service individually. Learn more in the official Kubernetes documentation.
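A minimal Ingress sketch, assuming a Service called mynginxapp on port 80 in a namespace of the same name (hypothetical names), and that the controller uses nginx, the IngressClass the ingress-nginx chart creates by default. The host mynginxapp.example.com is a placeholder, which is exactly the value the reader notice asks you to change:

```shell
# Write an Ingress that sends requests for one hostname to the Service.
cat > ingress-mynginxapp.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: mynginxapp
  namespace: mynginxapp
spec:
  ingressClassName: nginx            # default class created by the ingress-nginx chart
  rules:
    - host: mynginxapp.example.com   # placeholder: change to your own hostname
      http:
        paths:
          - path: /
            pathType: Prefix         # match / and everything beneath it
            backend:
              service:
                name: mynginxapp     # Service to forward matching requests to
                port:
                  number: 80
EOF
```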

Save this as ingress-mynginxapp.yaml

READER NOTICE

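You’ll need to update the host to a hostname you control that points at the ingress controller’s IP (a DNS record or a local hosts-file entry will do). NGINX routes requests by hostname, so a request whose Host header doesn’t match the rule gets a 404 page.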

If you need the ingress controller’s IP address (public or private, depending on which load balancer you deployed), you can run:

kubectl get svc ingress-nginx-controller -n ingress-nginx
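Then apply the Ingress manifest:

```shell
# Create or update the Ingress rule from the saved manifest.
kubectl apply -f ingress-mynginxapp.yaml
```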

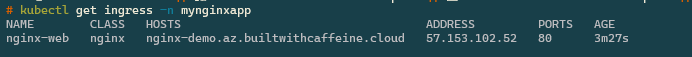

Once deployed, we can check that the ingress is online and healthy.
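Assuming the hypothetical mynginxapp namespace and Ingress name, these commands show whether the rule has been picked up. Once the controller syncs it, the ADDRESS column should show the ingress controller’s load balancer IP:

```shell
kubectl get ingress -n mynginxapp

# Rules, backends and recent events; handy if ADDRESS stays empty.
kubectl describe ingress mynginxapp -n mynginxapp
```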

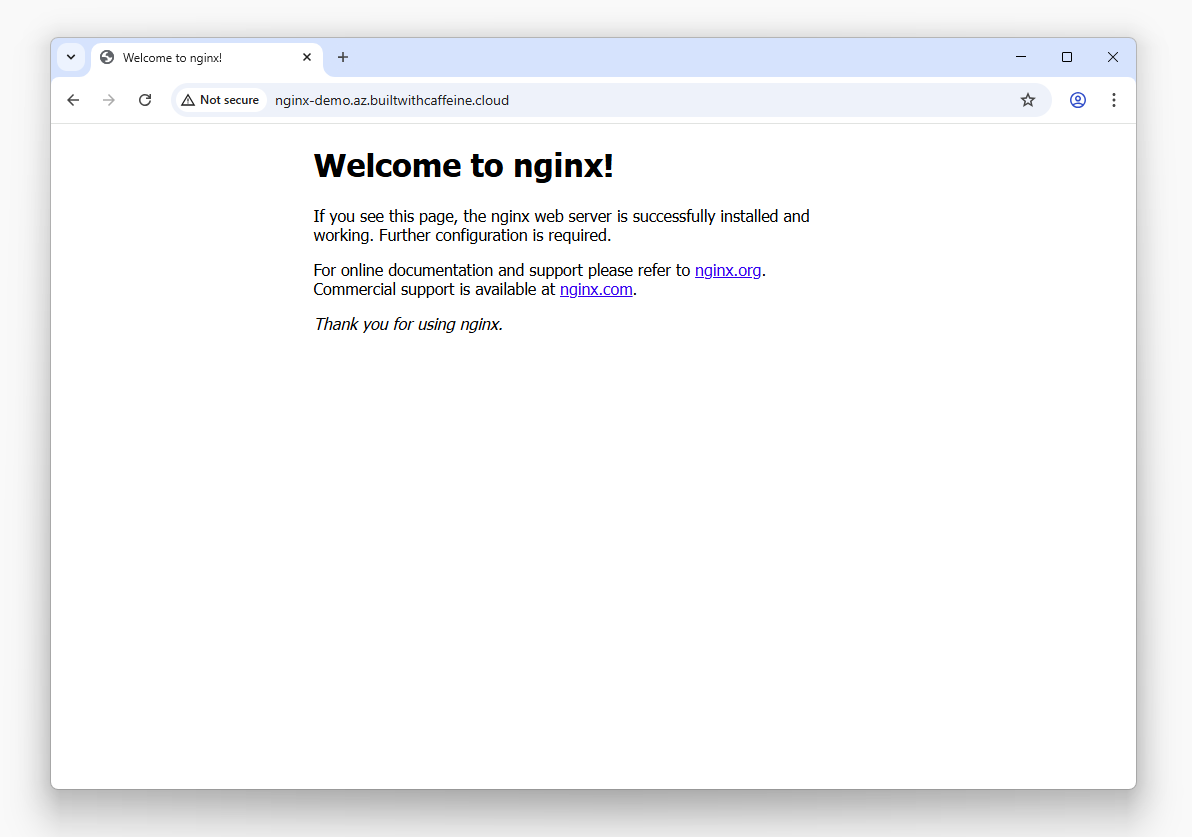

Nginx Connectivity Check
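A quick end-to-end test, sketched under a few assumptions: a bash-style shell, the placeholder host mynginxapp.example.com, and (for the internal load balancer) a machine inside the same virtual network. Setting the Host header explicitly means DNS doesn’t have to be in place yet:

```shell
# Look up the IP assigned to the ingress controller's LoadBalancer Service.
INGRESS_IP=$(kubectl get svc ingress-nginx-controller -n ingress-nginx \
  -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

# Send the Host header the Ingress rule matches on; a mismatch returns 404.
curl -i -H "Host: mynginxapp.example.com" "http://${INGRESS_IP}/"
```

A 200 OK with the default "Welcome to nginx!" page means the whole path (load balancer, ingress controller, Service, pods) is working.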

Wrapping Up

With that, you’ve successfully deployed an NGINX Ingress Controller on Azure Kubernetes Service (AKS) and configured a working HTTP-based demo app — complete with a Service and Ingress route.

You’ve just covered some key building blocks of Kubernetes networking:

- Ingress Controllers for routing external traffic into your cluster

- Services for stable internal connectivity

- Ingress Rules for host- and path-based routing

This setup not only helps you understand how traffic flows within Kubernetes but also lays the foundation for production-grade networking patterns — where TLS, DNS automation, and certificate management come next.

In the next post, we’ll take this a step further by integrating Cert-Manager and Let’s Encrypt to enable HTTPS/TLS termination and secure your endpoints automatically.

Connectivity Check Tip!

If everything’s green and reachable — congratulations!

You’ve built a fully functional ingress path into your AKS cluster. 🎉